PTL Developer's Guide

Using pbs_benchpress

How to run pbs_benchpress

Go to pbspro/test/tests in your cloned GitHub directory structure.

Syntax:

pbs_benchpress <option(s)>

Privilege

By default, pbs_benchpress automatically makes its current user a PBS Manager, via "qmgr -c 's s managers += <current user>'".

Options to pbs_benchpress

--cumulative-tc-failure-threshold=<count>

Cumulative test case failure threshold. Defaults to 100.

Set count to 0 to disable this threshold.

If not zero, must be greater than or equal to 'tc-failure-threshold'

--db-access=<path to DB parameter keyword file>

Path to a file that defines DB parameters (PostgreSQL only); can be absolute or relative.

See https://www.postgresql.org/docs/current/static/libpq-connect.html#LIBPQ-PARAMKEYWORDS.

You probably need to specify the following parameters:

dbname: database name

user: user name used to authenticate

password: password used to authenticate

host: database host address

port: connection port number

--db-name=<database name>

Database where test information and results metrics are stored for later processing.

Defaults to ptl_test_results.db.

--db-type=<database type>

Can be one of "file", "html", "json", "pgsql", "sqlite".

Defaults to "json".

--eval-tags='<Python expression>'

Selects only tests where Python expression evaluates to True. Can be applied multiple times.

Example:

'smoke and (not regression)' - Selects all tests which have "smoke" tag and don't have "regression" tag

'priority>4' - Selects all tests which have "priority" tag and where the value of priority is greater than 4
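To make the selection semantics concrete, here is a minimal sketch of how such an expression could be evaluated against the tags of a single test. It is not PTL's implementation: the helper name `matches` and the representation of tags as a dictionary are assumptions made for illustration. Plain tags are modelled as True, valued tags carry their value, and absent tags evaluate to False.

```python
from collections import defaultdict


def matches(expression, tags):
    """Return True if `expression` holds for one test's `tags`.

    Hypothetical helper, not part of PTL. `tags` maps tag names to
    values; a plain tag such as "smoke" is stored as True. Names that
    are absent evaluate to False instead of raising NameError.
    """
    namespace = defaultdict(bool, tags)
    # eval() executes arbitrary code: only use it on trusted expressions.
    return bool(eval(expression, {"__builtins__": {}}, namespace))


print(matches("smoke and (not regression)", {"smoke": True}))
print(matches("priority>4", {"priority": 3}))
```

The `defaultdict` is what lets an expression mention a tag the test does not carry.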

--exclude=<tests to exclude>

Comma-separated string of tests to exclude

-f <test files to run>

Comma-separated list of test file names

-F

Sets logging format to include timestamp and level

--follow-child

If set, walks the test hierarchy and runs each test. Starts from current directory.

-g <path to test suite file>

Absolute path to file containing comma-separated list of test suites

Used for very long list of tests

--genhtml-bin=<path to genhtml binary>

Defaults to "genhtml": PTL assumes genhtml is in PATH

-h

Displays usage information

-i

Prints test name and docstring to stdout, then exits.

-l <log level>

Specifies log level to use. Log levels are documented in PTL.

-L

Prints the following to stdout, then exits: test suite, file, module, suite docstring, tests, and number of test suites and test cases in current directory and all its subdirectories.

--lcov-baseurl=<url>

Base URL for lcov HTML report. Uses url as base URL.

--lcov-bin=<path to lcov binary>

Defaults to "lcov": PTL assumes lcov is in PATH

--lcov-data=<path to directory>

Path to directory containing .gcno files; can be absolute or relative

--lcov-nosrc

If set, do not include PBS source in coverage analysis.

By default, PBS source is included in coverage analysis

--lcov-out=<path to output directory>

Output path for HTML report.

Default path is TMPDIR/pbscov-YYYYMMDD_HHmmSS

--list-tags

Recursively list all tags in tests in current directory and its subdirectories.

--log-conf=<path to logging config file>

Absolute path to logging configuration file. No default.

File format specified in https://docs.python.org/2/library/logging.config.html#configuration-file-format.

--max-postdata-threshold=<count>

Max number of diagnostic tool runs per testsuite. Diagnostic tool is run on failure. Defaults to 10 sets of output from diagnostic tool.

Set count to 0 to disable this threshold.

--max-pyver=<version>

Maximum Python version

--min-pyver=<version>

Minimum Python version

-o <logfile>

Name of log file.

Logging output is always printed to stdout; this option specifies that it should be written to the specified log file as well.

-p <test parameter>

Comma-separated list of <key>=<value> pairs. Note that the comma cannot be used in value. Pre-defined pairs are in 'Custom parameters:' in http://pbspro.org/ptldocs/ptl.html#ptl.utils.pbs_testsuite.PBSTestSuite but you can add your own along with those.
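The comma restriction follows from the format itself, as the following sketch of a parser for such a string suggests. The function `parse_params` and the keys in the example are hypothetical; PTL's own parsing may differ in detail.

```python
def parse_params(spec):
    """Split 'k1=v1,k2=v2' into a dict of strings.

    Hypothetical helper, not PTL code. Because ',' separates the
    pairs, a value can never contain a comma; '=' may still appear
    inside a value because only the first '=' is treated as the
    separator.
    """
    params = {}
    for pair in spec.split(","):
        if not pair:
            continue  # tolerate a trailing comma
        key, sep, value = pair.partition("=")
        if not sep or not key:
            raise ValueError("expected <key>=<value>, got %r" % pair)
        params[key] = value
    return params


print(parse_params("retries=3,label=a=b"))
```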

--param-file=<parameter file>

If set, gets test parameters from file

Overrides -p

--post-analysis-data=<post-analysis data directory>

Path to directory where diagnostic tool output is placed.

--stop-on-failure

If set, stop when any test fails

-t <test suites>

Comma-separated list of test suites to run

--tags=<list of tags>

Selects only tests that have the specified tag. Can be applied multiple times.

Format: [!]<tag>[,<tag>]

Examples:

smoke - Selects all tests which have "smoke" tag

!smoke - Selects all tests which don't have "smoke" tag

smoke,regression - Selects all tests which have both "smoke" and "regression" tag
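The three examples above can be modelled with a few lines of Python. This is only a sketch under two assumptions that the text does not spell out: that "!" negates just the tag it prefixes, and that every comma-separated term must hold. The helper `select` is hypothetical and is not PTL's implementation.

```python
def select(spec, test_tags):
    """Return True if a test carrying `test_tags` matches `spec`.

    Hypothetical helper. Assumes '!' negates only the tag it prefixes
    and that all comma-separated terms must be satisfied.
    """
    have = {tag.lower() for tag in test_tags}
    for term in spec.split(","):
        term = term.strip().lower()
        negated = term.startswith("!")
        name = term.lstrip("!")
        # A plain term fails when the tag is absent;
        # a negated term fails when the tag is present.
        if (name in have) == negated:
            return False
    return True


print(select("smoke", ["smoke", "mom"]))
print(select("!smoke", ["smoke", "mom"]))
print(select("smoke,regression", ["smoke"]))
```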

--tags-info

Lists all selected test suites

When used with --verbose, lists all test cases

Must be used with --tags or --eval-tags

You can use -t or --exclude to limit selection

--tc-failure-threshold=<count>

Test case failure threshold per testsuite

Defaults to 10

To disable, set count to 0

--timeout=<seconds>

Duration after which no test suites are run

--verbose

Shows verbose output

Must be used with -i, -L or --tags-info

--version

Prints version number to stdout and exits

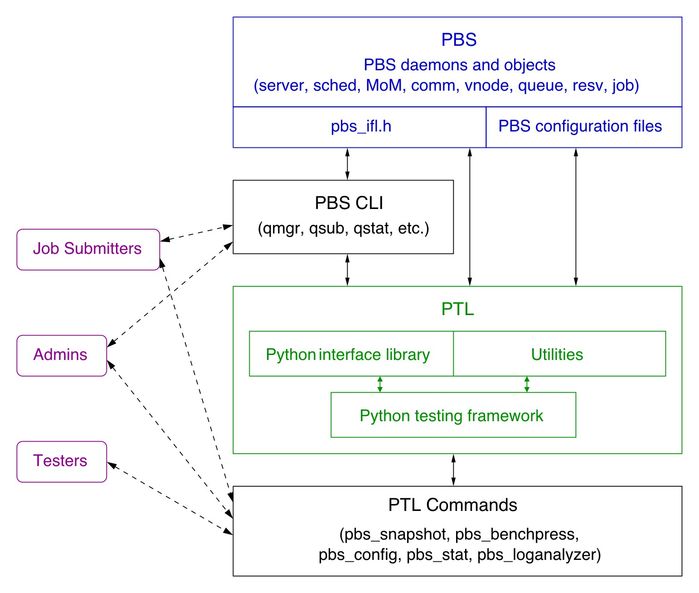

PTL Architecture

Contributing to PTL

Process for Improving PTL Framework

The process for improving the PTL framework is the same as the process you use for changing source code in PBS Professional.

PTL Directory Structure

Contents of pbspro/test/fw/:

| Directory | Description of Contents |
|---|---|
| fw | |
| bin | Test command: pbs_benchpress. PTL-based commands: pbs_config (for configuring PBS and PTL for testing). Commands that are not currently in use: pbs_as (for future API mode). |
| doc | Documentation source .rst files |
| ptl | PTL package |
| lib | Core Python library: PBSTestLib. Supporting files for PBSTestLib: pbs_api_to_cli.py, pbs_ifl_mock.py. |
| utils | PBS test suite: pbs_testsuite.py, containing PBSTestSuite (which is the Python testing framework). Utilities PTL provides, such as pbs_snaputils.py, pbs_logutils.py, pbs_dshutils.py, pbs_crayutils.py, etc. |
| plugins | Nose plugins for PTL framework |

Contents of pbspro/test/tests/:

| Directory | Description of Contents |
|---|---|
| tests | |
| functional | Feature-specific tests. Test suites under this directory should inherit base class TestFunctional. |
| interfaces | Tests related to PBS interfaces (IFL, TM, RM). Test suites under this directory should inherit base class TestInterfaces. |
| performance | Performance tests. Test suites under this directory should inherit base class TestPerformance. |
| resilience | Server & comm failover tests. Stress, load, and endurance tests. Test suites under this directory should inherit base class TestResilience. |
| security | Security tests. Test suites under this directory should inherit base class TestSecurity. |
| selftest | Testing PTL itself. Test suites under this directory should inherit base class TestSelf. |
| upgrades | Upgrade-related tests. Test suites under this directory should inherit base class TestUpgrades. |

Construction of PTL

PTL is derived from Python's unittest.

PTL uses nose plugins.

Testing PTL

Tests that test PTL are in pbspro/test/tests/selftest, at https://github.com/PBSPro/pbspro/tree/master/test/tests/selftest

Enhancing PTL for New PBS Attributes

How to Add a New Attribute to the Library

This section is for PBS developers who may be adding a new job, queue, server, or vnode attribute, and need to write tests that depend on their new attribute. PTL does not automatically generate mappings from API to CLI, so when adding new attributes, it is the responsibility of the test writer to define the attribute conversion in ptl/lib/pbs_api_to_cli.py. They must also define the new attribute in ptl/lib/pbs_ifl_mock.py so that the attribute name can be dereferenced if the SWIG wrapping was not performed.

For example, let's assume we are introducing a new job attribute called ATTR_geometry that maps to the string "job_geometry". In order to be able to set this attribute for a job:

We need to define it in pbs_api_to_cli.py as follows:

ATTR_geometry: "W job_geometry="

Add it to ptl/lib/pbs_ifl_mock.py as follows:

ATTR_geometry: "job_geometry"

In order to get the API to use the new attribute, rerun pbs_swigify, so that symbols from pbs_ifl.h are read in
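The two definitions can be pictured with the self-contained sketch below. The dictionary name `api_to_cli`, the helper `to_cli_option`, and the way the option string is assembled (a leading "-" followed by the mapped text and the value) are assumptions for illustration; check ptl/lib/pbs_api_to_cli.py for the actual structure.

```python
# Stand-in for the symbol normally read from pbs_ifl.h, or defined in
# ptl/lib/pbs_ifl_mock.py when the SWIG wrapping was not performed.
ATTR_geometry = "job_geometry"

# Stand-in for the mapping kept in ptl/lib/pbs_api_to_cli.py
# (the dictionary name is an assumption).
api_to_cli = {ATTR_geometry: "W job_geometry="}


def to_cli_option(attr, value):
    """Hypothetical helper: render one attribute as a command-line option."""
    return "-" + api_to_cli[attr] + str(value)


# "2x2" is a made-up value for the example attribute.
print(to_cli_option(ATTR_geometry, "2x2"))
```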

Nose Plugins for PTL

PTL nose plugins are written for test collection, selection, observation, and reporting. Below are the various PTL plugins:

PTLTestLoader: Load test cases from a given set of parameters

PTLTestRunner: Run tests

PTLTestInfo: Generate test suite and test case information

PTLTestDb: Update the PTL execution data into a given database type

PTLTestData: Save post-analysis data on test case failure or error

PTLTestTags: Load test cases from a given set of parameters for tag names

Nose supports plugins for test collection, selection, observation, and reporting.

Plugins need to implement certain mandatory methods. Plugin hooks fall into four broad categories: selecting and loading tests, handling errors raised by tests, preparing objects used in the testing process, and watching and reporting on test results.

Some of the PTL plugins, with their purposes, are listed below:

PTLTestLoader

Load test cases from given parameters.

This plugin loads the required list of tests based on the parameters provided at run time. It also checks for unknown test suites and test cases, and excludes any tests specified for exclusion.

PTLTestRunner

PTL plugin to run tests.

This plugin defines the following functionalities related to running tests:

Start or stop the test

Add error, success, or failure

Track test timeout

If post analysis is required, raise an exception when a test case failure threshold is reached

Log handler for capturing logs printed by the test case via logging module

Test result handling

PTLTestInfo

Load test cases from given parameters to get their information docstrings.

This plugin is used to generate test suite info, rather than running tests. This info includes test suite and test case docstrings in hierarchical order. It can be queried using test suite names or tags.

PTLTestDb

Update the PTL execution data into a given database type such as File, HTML, etc.

This plugin is for uploading PTL data into a database. PTL data includes all the information regarding a PTL execution, such as test name, test suite name, PBS version tested on, hostname where the test is run, test start time, end time, duration, and test status (skipped, timed out, errored, failed, succeeded, etc.). PTL data can be uploaded to any of the supported database types, including File, JSON, HTML, and PostgreSQL. The PTLTestDb plugin also defines a method to send analyzed PBS daemon log information either to the screen or to a database file, which is used with the pbs_loganalyzer test command.

PTLTestData

Save post analysis data on test case failure or error.

This plugin is for saving post analysis data on test case failure in PTL, along with tracking failures and handling test execution when failure thresholds are reached.

PTLTestTags

Load test cases from given parameters for tag names.

This plugin provides test tagging. It defines a Decorator function that adds tags to classes, functions, or methods, and loads the matching set of tests according to a given set of parameters.

Nose Documentation

http://nose.readthedocs.io/en/latest/testing.html

http://pythontesting.net/framework/nose/nose-introduction/

http://nose.readthedocs.io/en/latest/plugins/writing.html

Related Links and References

Complete Doxygenated PTL Documentation

http://www.pbspro.org/ptldocs/

Repository

https://github.com/PBSPro/pbspro

https://github.com/PBSPro/pbspro/test

Python Documentation

Writing PTL Tests

PTL Test Directory Structure

| Directory | Description of Contents |
|---|---|
| tests | |
| functional | Feature-specific tests. Test suites under this directory should inherit base class TestFunctional. |
| interfaces | Tests related to PBS interfaces (IFL, TM, RM). Test suites under this directory should inherit base class TestInterfaces. |
| performance | Performance tests. Test suites under this directory should inherit base class TestPerformance. |
| resilience | Server & comm failover tests. Stress, load, and endurance tests. Test suites under this directory should inherit base class TestResilience. |
| security | Security tests. Test suites under this directory should inherit base class TestSecurity. |
| selftest | Testing PTL itself. Test suites under this directory should inherit base class TestSelf. |
| upgrades | Upgrade-related tests. Test suites under this directory should inherit base class TestUpgrades. |

PTL Test Naming Conventions

Test File Conventions

Each test file contains one test suite.

Name: pbs_<feature name>.py

- Start file name with pbs_ then use feature name

- Use only lower-case characters and the underscore (“_”) (this is the only special character allowed)

- Start comments inside the file with a single # (do not use triple- or single-quoted strings as comments)

- No camel case needed in test file name

Examples: pbs_reservations.py, pbs_preemption.py

- Permission for file should be 0644

Test Suite Conventions

Each test suite is a Python class made up of tests. Each test is a method in the class.

Name: Test<Feature>

- Start the name of the test suite with the string “Test”

- Use unique, English-language explanatory name

- Use naming conventions for Python Class names (Camel case)

- Docstring is mandatory. This gives broad summary of tests in the suite

- Start comments with a single # (No triple/single quotes)

- Do not use ticket ID

Examples: TestReservations, TestNodesQueues

Test Case Conventions

Each test is a Python method and is a member of a Python class defining a test suite. A test is also called a test case.

Name: test_<test description>

- Start test name with "test_", then use all lower case alphanumeric for the test description

- Make name unique, accurate, & explanatory, but concise; can have multiple words if needed

- Docstring is mandatory. This gives summary of the whole test case

- Tagging is optional. A tag can be based on the category to which the test belongs. Ex: @tags('smoke')

- Test case name need not include the feature name (as it will be part of the test suite name anyway)

- Start comments with a single # (No triple/single quoted comments)

Examples: test_create_routing_queue, test_finished_jobs
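The file, test suite, and test case naming rules above lend themselves to a mechanical check. The sketch below is one possible reading of them as regular expressions; the patterns and the helper `check_names` are assumptions for illustration and are not a tool shipped with PTL.

```python
import re

# Assumed interpretations of the naming rules described above.
FILE_RE = re.compile(r"^pbs_[a-z_]+\.py$")          # pbs_<feature name>.py
SUITE_RE = re.compile(r"^Test[A-Z][A-Za-z0-9]*$")   # Test<Feature>, camel case
CASE_RE = re.compile(r"^test_[a-z0-9_]+$")          # test_<test description>


def check_names(file_name, suite_name, case_name):
    """Return a list of convention violations (empty if there are none)."""
    problems = []
    if not FILE_RE.match(file_name):
        problems.append("bad test file name: %s" % file_name)
    if not SUITE_RE.match(suite_name):
        problems.append("bad test suite name: %s" % suite_name)
    if not CASE_RE.match(case_name):
        problems.append("bad test case name: %s" % case_name)
    return problems


print(check_names("pbs_reservations.py", "TestReservations",
                  "test_finished_jobs"))
print(check_names("PbsResv.py", "Reservations", "testFoo"))
```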

Inherited Python Classes

PTL is derived from and inherits classes from the Python unittest unit testing framework.

PTL test suites are directly inherited from the unittest TestCase class.

Writing Your PTL Test

Main Parts of a PTL Test

You can think of a PTL test as having 3 parts:

- Setting up your environment

- Running your test

- Checking the results

Using Attributes

Many PTL commands take an attribute dictionary.

- This is of the form {'attr_name1': 'val1', 'attr_name2': 'val2', …, 'attr_nameN': 'valN'}

- To modify resources, use the 'attr_name.resource_name' form, like 'resources_available.ncpus' or 'Resource_List.ncpus'

- Many of the ‘attr_nameN’ can be replaced with its formal name like ATTR_o or ATTR_queue. A list of these can be found in the pbs_ifl.h C header file.
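As a small illustration of these points, the dictionary below mixes a resource in 'attr_name.resource_name' form with two formal names. The two constants are defined by hand here only so that the snippet is self-contained; in a PTL test they are already available. The queue name and output path are made-up values.

```python
# String values as defined in pbs_ifl.h; defined by hand here only to
# keep the example self-contained.
ATTR_o = "Output_Path"
ATTR_queue = "queue"

job_attrs = {
    "Resource_List.ncpus": 2,   # resource in attr_name.resource_name form
    ATTR_queue: "workq2",       # formal name instead of the string 'queue'
    ATTR_o: "/tmp/job.out",     # hypothetical output path
}

for name, value in sorted(job_attrs.items()):
    print(name, "=", value)
```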

Setting Up Your Environment

The idea here is to create an environment which can run the test no matter what machine the test is being run on. You may need to create queues, nodes, or resources, or set attributes, etc.

First you need to set up your vnode(s). This is a required step because if you don’t, the natural vnode will be left as is. This means the vnode will have different resources depending on what machine the test is run on. This can be done in one of two ways:

- If you only need one vnode, you can modify the natural vnode.

- This is done by setting resources/attributes with self.server.manager(). Use self.server.manager(MGR_CMD_SET, VNODE, {attribute dictionary}, id=self.mom.shortname)

- If you need more than one vnode, you create them with self.server.create_vnodes(attrib={attribute dictionary}, num=N, mom=self.mom)

After you set up your vnodes, you might need to set attributes on servers or queues or even create new queues or resources. This is all done via the self.server.manager() call.

Examples of Setting up Environment

- To create a queue named workq2:

- self.server.manager(MGR_CMD_CREATE, QUEUE, {attribute dictionary}, id='workq2')

- Similarly to create a resource:

- self.server.manager(MGR_CMD_CREATE, RSC, {'type': <type>, 'flag': <flags>}, id=<name>)

- To set a server attribute:

- self.server.manager(MGR_CMD_SET, SERVER, {attribute dictionary})

- To set a queue attribute:

- self.server.manager(MGR_CMD_SET, QUEUE, {attribute dictionary}, id=<queue name>)
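The sketch below strings these calls together in the order a setup routine might issue them. So that it runs without PBS or PTL, it uses a hypothetical `RecordingServer` stand-in that only records the calls, and placeholder values for the constants; in a real test you would call self.server.manager() with the constants PTL provides. The resource name "foo" and the attribute values are examples only.

```python
# Placeholder constants; in a real test they are provided by PTL.
MGR_CMD_CREATE, MGR_CMD_SET = "create", "set"
SERVER, QUEUE, VNODE, RSC = "server", "queue", "node", "resource"


class RecordingServer(object):
    """Hypothetical stand-in for self.server that only records calls."""

    def __init__(self):
        self.calls = []

    def manager(self, cmd, obj_type, attrib=None, id=None):
        self.calls.append((cmd, obj_type, attrib, id))


def set_up_environment(server, mom_name):
    """Issue the setup calls described above, in order."""
    # Pin the natural vnode's resources so results do not depend on the host.
    server.manager(MGR_CMD_SET, VNODE,
                   {"resources_available.ncpus": 4}, id=mom_name)
    # Create a custom resource (example name and flags).
    server.manager(MGR_CMD_CREATE, RSC,
                   {"type": "long", "flag": "nh"}, id="foo")
    # Create a second execution queue.
    server.manager(MGR_CMD_CREATE, QUEUE,
                   {"queue_type": "execution", "enabled": "True",
                    "started": "True"}, id="workq2")
    # Set a server attribute.
    server.manager(MGR_CMD_SET, SERVER, {"scheduling": "False"})


server = RecordingServer()
set_up_environment(server, "hostA")
for call in server.calls:
    print(call)
```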

Creating Your Test Workload

Usually to run a test you need to submit jobs or reservations. These are of the form:

- j = Job(<user>, {attribute dictionary})

OR

- r = Reservation(<user>, {attribute dictionary})

- <user> can be one of the test users that are created for PTL. A common user is TEST_USER.

The attribute dictionary usually consists of the resources (e.g. Resource_List.ncpus or Resource_List.select) and maybe other attributes like ATTR_o. To submit a job to another queue, use ATTR_queue.

This just creates a PTL job or reservation object. By default these jobs will sleep for 100 seconds and exit. To change the sleep time of a job, call j.set_sleep_time(N).

Finally you submit your job/reservation.

- job_id = self.server.submit(j)

- resv_id = self.server.submit(r)

Many tests require more than one reservation. Follow the above steps multiple times for those.

Once you have submitted your job or reservation, you should check if it is in the correct state.

- self.server.expect(JOB, {ATTR_state: 'R'}, id=job_id)

- self.server.expect(RESV, {'reserve_state': (MATCH_RE, 'RESV_CONFIRMED|2')}) (don't worry about this funny match, just use it).

As you run your test, make sure that most steps have completed correctly. This is mostly done through expect(). The expect() function queries PBS up to 60 times, once every half second (30 seconds in total), to see whether the attributes have the expected values. If they still do not after 60 attempts, a PtlExpectError exception is raised.
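The polling behaviour described for expect() can be pictured with the following self-contained sketch. It is not PTL's implementation: the function signature, the `query` callable, and the `ExpectTimeout` exception (a stand-in for PtlExpectError) are assumptions made so that the example runs without PBS.

```python
import time


class ExpectTimeout(Exception):
    """Hypothetical stand-in for PTL's PtlExpectError."""


def expect(query, wanted, max_attempts=60, interval=0.5):
    """Poll `query()` until its result matches `wanted`.

    `query` returns a dict of current attribute values. Returns the
    number of attempts used, or raises ExpectTimeout once
    `max_attempts` queries have failed to match.
    """
    for attempt in range(1, max_attempts + 1):
        current = query()
        if all(current.get(key) == value for key, value in wanted.items()):
            return attempt
        if attempt < max_attempts:
            time.sleep(interval)
    raise ExpectTimeout("%r not reached after %d attempts"
                        % (wanted, max_attempts))


# Simulated job that reaches state R on the third query.
states = iter(["Q", "Q", "R"])
attempts = expect(lambda: {"job_state": next(states)},
                  {"job_state": "R"}, interval=0)
print("matched after", attempts, "attempts")
```

With the defaults of 60 attempts and a 0.5 second interval, the sketch gives up after roughly 30 seconds, which matches the description above.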

Running Your Test

Checking Your Results

This is where you check whether your test has passed. To do this, use self.server.expect() as described above, log_match(), or the assert methods provided by unittest. The most useful asserts are self.assertTrue(), self.assertFalse(), and self.assertEqual(). There are asserts for all the normal comparison operators (even the in operator). For example, self.assertGreater(a, b) tests a > b. Each assert takes an optional final argument, a message that is printed if the assertion fails. The log_match() function is available on each of the daemon objects (e.g. self.server, self.mom, self.scheduler).

Examples of Checking Results

- self.server.expect(NODE, {'state': 'offline'}, id=<name>)

- self.scheduler.log_match('Insufficient amount of resource')

- self.assertTrue(a)

- self.assertEqual(a, b)
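The assert methods, including the optional failure message, can be tried out with plain unittest. The suite below is a stand-in that does not talk to PBS; the class name and the values being compared are made up for the example.

```python
import unittest


class TestAssertExamples(unittest.TestCase):
    """Stand-in suite showing assertions with failure messages."""

    def test_comparisons(self):
        """Each assert carries a message that is printed on failure."""
        used, limit = 3, 4
        nodes = ["node1", "node2"]
        self.assertTrue(used > 0, "no CPUs were used")
        self.assertFalse(used > limit, "usage exceeds the limit")
        self.assertEqual(len(nodes), 2, "unexpected node count")
        self.assertGreater(limit, used, "limit should exceed usage")
        self.assertIn("node1", nodes, "node1 missing from node list")


outcome = unittest.TextTestRunner(verbosity=0).run(
    unittest.defaultTestLoader.loadTestsFromTestCase(TestAssertExamples))
```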

Adding PTL Test Tags

PTL test tags let you list or execute a category of similar or related test cases across test suites in test directories. To include a test case in a category, tag it with the “@tags(<tag_name>)” decorator. Tag names are case-insensitive.

See the pbs_benchpress page for how you can use tags to select tests.

Pre-defined PTL Test Tags

| # | Tag Name | Description |
|---|---|---|
| 1 | smoke | Tests related to basic features of PBS, such as job or reservation submission/execution/tracking, etc. |
| 2 | server | Tests related to server features exclusively. Ex: server requests, receiving & sending job for execution etc. |
| 3 | sched | Tests related to scheduler exclusively. Ex: tests related to scheduler daemon, placement of jobs, implementation of scheduling policy etc. |
| 4 | mom | Tests related to mom, i.e. processing of jobs received from server and reporting back etc. Ex: Mom polling etc. |
| 5 | comm | Tests related to communication between server, scheduler and mom. |
| 6 | hooks | Tests related to server hooks or mom hooks |
| 7 | reservations | Tests related to reservations |
| 8 | configuration | Tests related to any PBS daemon configurations. |
| 9 | accounting | Tests related to accounting logs |
| 10 | scheduling_policy | Tests related to job scheduling policy of the scheduler |
| 11 | multi_node | Tests involving more than one node complex |
| 12 | commands | Tests related to PBS commands and their outputs (client related) |
| 13 | security | Tests related to authentication, authorisation etc. |
| 14 | windows | Tests that can run only on the Windows platform |
| 15 | cray | Tests that can run only on the Cray platform |
| 16 | cpuset | Tests that can run only on a cpuset system |

Tagging Test Cases

Examples of tagging test cases:

All the test cases of pbs_smoketest.py are tagged with “smoke”.

>>>>>

@tags('smoke')

class SmokeTest(PBSTestSuite):

>>>>>

Multiple tags can be specified, as shown here:

>>>>>

@tags('smoke', 'mom', 'configuration')

class Mom_fail_requeue(TestFunctional):

>>>>>
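To show what such a decorator does in principle, here is a simplified, hypothetical re-implementation that runs without PTL. The attribute name `ptl_tags` and the merging behaviour are assumptions; PTL's real `tags` decorator may store the information differently. Tag names are lower-cased because tag names are case-insensitive.

```python
def tags(*names):
    """Hypothetical tagging decorator for classes, functions, or methods."""
    lowered = {name.lower() for name in names}

    def decorate(obj):
        # Merge with tags attached earlier (for example, by a base class).
        existing = set(getattr(obj, "ptl_tags", ()))
        obj.ptl_tags = existing | lowered
        return obj

    return decorate


@tags("smoke", "MoM")
class TestMomFailRequeue(object):
    """Stand-in suite; a real one would inherit TestFunctional."""

    @tags("configuration")
    def test_requeue(self):
        """Placeholder test case."""


print(sorted(TestMomFailRequeue.ptl_tags))
print(sorted(TestMomFailRequeue.test_requeue.ptl_tags))
```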

Using Tags to List Tests

Use the --tags-info option to list the test cases with a specific tag. For example, to find test cases tagged with "smoke":

pbs_benchpress --tags-info --tags=smoke

Finding Existing Tests

To find a test case of a particular feature:

Ex: Find an ASAP reservations test case

- Look for the appropriate directory – which is functional in this case

- Look for the existence of the relevant feature test suite file in this directory or run command to find test suites

ex. pbs_reservations.py

ex. pbs_benchpress -t TestFunctional -i

- Look for the test suite info to get doc string of test cases related to ASAP reservation

pbs_benchpress -t TestReservations -i --verbose

- All reservations tests can be listed as below

pbs_benchpress --tags-info --tags=reservations --verbose

The same command can be used to list tests inside the directories. Ex: All reservations tests inside performance directory

Placing New Tests in Correct Location

To add a new test case of a particular feature or bug fix:

Ex: A test case for a bug fix that updated accounting logs

- Look for the appropriate directory - which is functional in this case

- Look for the existence of the relevant feature test suite file or run command to list test suites of base class

ex. In the functional test directory, look for any test file or test suite associated with “log”. If one is present, add the test case to that test suite

ex. pbs_benchpress -t TestFunctional -i

- If test suite is not present, add a new test suite with name Test<Featurename> in file with name pbs_<featurename>.py

- Tag the new test case if necessary

If the test case seems to belong to any of the features listed in tag list, it can be tagged so.

Ex. @tags('accounting')

Using Tags to Run Desired Tests

Use the --tags option to execute the test cases, including hierarchical tests, tagged with a specific tag. For example, to execute the test cases tagged with "smoke":

pbs_benchpress --tags=smoke

Ex: All scheduling_policy tests

- Look for the appropriate directory - which is functional in this case

- Look for that feature in tag list. If present, run with tag name as below:

pbs_benchpress --tags=scheduling_policy

- If no matching tag is present, look for the relevant test suite(s) and run them:

pbs_benchpress -t <suite names>