PP-316: PMIx Integration with PBS Pro

Jira ticket: PP-316

This document conforms to the PBS Pro Design Document Guidelines

Forum discussion: https://community.openpbs.org/t/pmix-integration-with-pbs-pro/1841

Preface

This is an evolving document that will be modified over time as the project progresses. The intent is to avoid having multiple documents that cover different phases of work and drift apart over time, and instead to keep the details in a single body of work. The design begins with the background information on PMIx that the reader needs to understand the integration. The various phases of the integration work are covered once this background information has been presented.

Overview

The Process Management Interface (PMI) is responsible for managing the processes associated with large-scale parallel applications. It is a standard that is integrated into most MPI implementations. When a user wishes to run a parallel application, they must first submit a job to the resource manager (e.g. PBS Pro) expressing its requirements. The resource manager identifies a set of resources to allocate for the job and instantiates a shell process on behalf of the user that evaluates their job script. To execute a parallel application within a PBS Pro job, the user must invoke a launcher such as mpirun. The launcher may use different techniques to instantiate processes on the other nodes; PBS Pro provides its own Task Manager (TM) interface for this purpose. PMI provides MPI with the information it needs to support communication between the individual ranks of the application.

PMIx is a new implementation of PMI targeted at exascale HPC systems. It has several advantages over previous implementations, including performance and scalability. By integrating PMIx capabilities with PBS Pro, users will be able to take advantage of these benefits.

Glossary

| Term | Definition |
|---|---|
| MPI | Message Passing Interface providing a means for communication between ranks of parallel applications |
| OMPI | OpenMPI |
| PMI | Process Management Interface (versions 1 and 2) |
| PMIx | Process Management Interface eXascale |
| PRRTE | PMIx Reference Runtime Environment |
| rank | A unique ordinal assigned by MPI to each component comprising a parallel application |
| RM | Resource Manager (e.g. PBS Pro, SLURM, etc.) |
| TM | PBS Pro Task Manager |
| WLM | Workload Manager (a.k.a. RM) |

PMIx Background

The PMI standard has evolved over time starting with PMI-1. The successor, PMI-2, addressed several shortcomings present in the initial version. PMIx is a new implementation that extends the capabilities of previous implementations while remaining backward compatible.

First and foremost, PMIx is a standard that is implemented as a library. It is not a daemon or standalone system service. Components including parallel programming tools and resource managers link against the PMIx library in order to fulfill their role in the overall scheme.

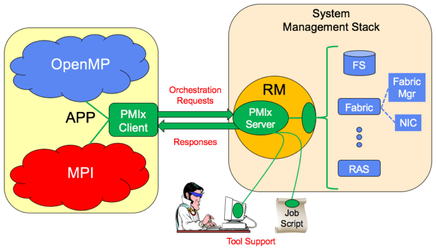

The overall idea is that a PMIx client (an application) interacts with the PMIx server (implemented within a resource manager such as PBS Professional) to perform operations on its behalf. The major benefits of PMIx are scalability, performance, and the variety of operations it supports (e.g. debugger support, queries, request and release, etc.).

Image courtesy of pmix.org

It is important to note that the PMIx server is not a standalone daemon, but a library. It is up to the resource manager to utilize the calls provided in the library to implement the server. In the case of PBS Pro, this will be implemented within the MoM. Each MoM assigned to a job must play the role of the local PMIx server. In the case of multinode jobs, the mother superior will instantiate the PMIx server for rank zero. Processes spawned on other nodes in the sisterhood (via the TM interface or pbs_attach) will also require an instance of the PMIx server with which to interact.

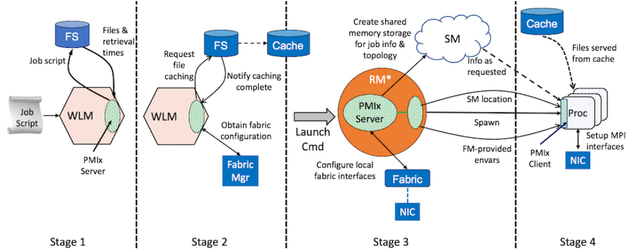

The diagram below illustrates the various phases involved in a typical application launch involving PMIx:

Image courtesy of pmix.org

The following sections outline how to get started with PMIx and PRRTE. They include instructions for building and installing each package and for running some basic examples.

Obtaining and Building PMIx

PMIx is hosted on GitHub like many other open source packages, and the steps to build it are fairly straightforward. The build produces only a few binaries; the libraries and header files are what will be used later on.

Clone the PMIx repository.

$ git clone https://github.com/openpmix/openpmix.git

Build the source code using the version you prefer. In this case we will use the master branch (v4.0 at the time of writing).

$ cd openpmix
$ ./autogen.pl
[ output omitted ]
$ ./configure --prefix=/opt/pmix --without-slurm --with-devel-headers --disable-debug
[ output omitted ]
$ make V=1
[ output omitted, the V=1 argument disables Automake's silent build rules so the full compiler command lines are printed instead of just the file being compiled ]
$ sudo make install
[ output omitted ]

The V=1 parameter is passed to make so that the exact command line used for each compilation is displayed. This can be handy when compiling some of the example code later on.

PMIx is now installed in /opt/pmix on your system.

$ /opt/pmix/bin/pmix_info
[pbs-server:53900] pmix_mca_base_component_repository_open: "mca_pcompress_zlib" does not appear to be a valid pcompress MCA dynamic component (ignored): /opt/pmix/lib/pmix/mca_pcompress_zlib.so: undefined symbol: mca_pcompress_zlib_component. ret -1
Package: PMIx mike@pbs-server Distribution
PMIX: 4.0.0a1
PMIX repo revision: gita943a1b
PMIX release date: Mar 10, 2018
Prefix: /opt/pmix
Configured architecture: pmix.arch
Configure host: pbs-server
Configured by: mike
Configured on: Tue Mar 12 14:13:54 EDT 2019
Configure host: pbs-server
Configure command line: '--prefix=/opt/pmix' '--with-devel-headers' '--disable-debug'
[ remaining output omitted ]

The error coming from pmix_info appears to be due to some recent change to compression within PMIx. This error will likely disappear in time.

Obtaining and Building PRRTE

The point of PRRTE is to provide a reference implementation that mimics the behavior of a PMIx server. It provides a standalone daemon that may be used to run some simple examples. The intent is for PBS Professional to provide similar (yet more robust) functionality once integration is complete.

Clone the PRRTE repository.

$ git clone https://github.com/openpmix/prrte.git

Build the source. In this case we use the master branch because some recent changes have been checked in there. Note that this build is very similar to that of OpenMPI in terms of options because OpenMPI contains a significant portion of PMIx. PBS Professional should already be installed in /opt/pbs for this to work, since the --with-tm option points at that location.

$ cd prrte
$ ./autogen.pl
[ output omitted ]
$ ./configure --prefix=/opt/prrte --with-pmix=/opt/pmix --with-tm=/opt/pbs --without-slurm --disable-debug
[ output omitted ]
$ make V=1
[ output omitted, the V=1 argument disables Automake's silent build rules so the full compiler command lines are printed instead of just the file being compiled ]
$ sudo make install
[ output omitted ]

PRRTE is now installed in /opt/prrte on your system.

$ /opt/prrte/bin/prte_info
Open RTE: 4.1.0a1
Open RTE repo revision: dev-30019-gcf924af
Open RTE release date: Nov 24, 2018
Prefix: /opt/prrte
Configured architecture: x86_64-unknown-linux-gnu
Configure host: pbs-server
Configured by: mike
Configured on: Tue Mar 12 14:06:42 EDT 2019
Configure host: pbs-server
Configure command line: '--prefix=/opt/prrte' '--with-pmix=/opt/pmix' '--with-tm=/opt/pbs' '--without-slurm' '--disable-debug'

Running Applications with PRRTE

The "examples" directory of the PRRTE source code contains a sample server implementation along with several client applications. We will start where we left off, in the top-level PRRTE source directory.

Adjust your PATH and compile the examples.

$ PATH=$PATH:/opt/prrte/bin
$ cd examples
$ make
pcc -g client.c -o client
pcc -g client2.c -o client2
pcc -g debugger/direct.c -o debugger/direct
pcc -g debugger/indirect.c -o debugger/indirect
pcc -g debugger/attach.c -o debugger/attach
pcc -g debugger/daemon.c -o debugger/daemon
pcc -g debugger/hello.c -o debugger/hello
pcc -g dmodex.c -o dmodex
pcc -g dynamic.c -o dynamic
pcc -g fault.c -o fault
pcc -g pub.c -o pub
pcc -g tool.c -o tool
pcc -g alloc.c -o alloc
pcc -g probe.c -o probe
pcc -g target.c -o target
pcc -g hello.c -o hello
pcc -g log.c -o log
pcc -g bad_exit.c -o bad_exit
pcc -g jctrl.c -o jctrl
pcc -g launcher.c -o launcher
$

Start the local PRRTE server. When PRRTE was installed, a binary named "prte" was added to /opt/prrte/bin. Since that directory is now in our path, it is easy to start the local daemon. In this case, we'll start the daemon in debug mode to obtain some additional output.

$ prte -d
[pbs-server:90553] procdir: /tmp/ompi.pbs-server.1000/dvm/0/0
[pbs-server:90553] jobdir: /tmp/ompi.pbs-server.1000/dvm/0
[pbs-server:90553] top: /tmp/ompi.pbs-server.1000/dvm
[pbs-server:90553] top: /tmp/ompi.pbs-server.1000
[pbs-server:90553] tmp: /tmp
[pbs-server:90553] sess_dir_cleanup: job session dir does not exist
[pbs-server:90553] sess_dir_cleanup: top session dir does not exist
[pbs-server:90553] procdir: /tmp/ompi.pbs-server.1000/dvm/0/0
[pbs-server:90553] jobdir: /tmp/ompi.pbs-server.1000/dvm/0
[pbs-server:90553] top: /tmp/ompi.pbs-server.1000/dvm
[pbs-server:90553] top: /tmp/ompi.pbs-server.1000
[pbs-server:90553] tmp: /tmp

The daemon will continue running in the session where it was started. The client commands will be launched from a new bash session. We will need to update our path in this new session, and then launch some sample clients.

$ cd prrte/examples/
$ PATH=$PATH:/opt/prrte/bin
$ prun -n 2 ./hello
Client ns 3548315651 rank 0 pid 90736: Running on host pbs-server localrank 0
Client ns 3548315651 rank 0: Finalizing
Client ns 3548315651 rank 1 pid 90737: Running on host pbs-server localrank 1
Client ns 3548315651 rank 1: Finalizing
Client ns 3548315651 rank 0:PMIx_Finalize successfully completed
Client ns 3548315651 rank 1:PMIx_Finalize successfully completed
$ prun -n 2 ./client
Client ns 3548315653 rank 0 pid 90745: Running
Client 3548315653:0 universe size 2
Client 3548315653:0 num procs 2
Client ns 3548315653 rank 1 pid 90746: Running
Client 3548315653:1 universe size 2
Client 3548315653:1 num procs 2
Client ns 3548315653 rank 0: PMIx_Get 3548315653-0-local returned correct
Client ns 3548315653 rank 0: PMIx_Get 3548315653-0-remote returned correct
Client ns 3548315653 rank 0: PMIx_Get 3548315653-0-local returned correct
Client ns 3548315653 rank 1: PMIx_Get 3548315653-1-local returned correct
Client ns 3548315653 rank 1: PMIx_Get 3548315653-1-remote returned correct
Client ns 3548315653 rank 1: PMIx_Get 3548315653-1-local returned correct
Client ns 3548315653 rank 0: PMIx_Get 3548315653-0-remote returned correct
Client ns 3548315653 rank 0: Finalizing
Client ns 3548315653 rank 1: PMIx_Get 3548315653-1-remote returned correct
Client ns 3548315653 rank 1: Finalizing
Client ns 3548315653 rank 0:PMIx_Finalize successfully completed
Client ns 3548315653 rank 1:PMIx_Finalize successfully completed
$ prun -n 2 ./client2
Client ns 3548315655 rank 0: Running
Client ns 3548315655 rank 1: Running
Client 3548315655:1 job size 2
Client 3548315655:0 job size 2
Client ns 3548315655 rank 1: Finalizing
Client ns 3548315655 rank 0: Finalizing
Client ns 3548315655 rank 1:PMIx_Finalize successfully completed
Client ns 3548315655 rank 0:PMIx_Finalize successfully completed

Meanwhile, back in the server session, the daemon also produces output:

[pbs-server:90553] sess_dir_finalize: proc session dir does not exist
[pbs-server:90553] sess_dir_finalize: job session dir does not exist
[pbs-server:90553] sess_dir_finalize: jobfam session dir not empty - leaving
[pbs-server:90553] sess_dir_finalize: jobfam session dir not empty - leaving
[pbs-server:90553] sess_dir_finalize: top session dir not empty - leaving
[pbs-server:90553] [[54143,0],0] Releasing job data for [54143,1]
[pbs-server:90553] [[54143,0],0] Releasing job data for [INVALID]
[pbs-server:90553] [[54143,0],0] Releasing job data for [INVALID]
[ similar output for jobs [54143,2] through [54143,5] omitted ]
[pbs-server:90553] [[54143,0],0] Releasing job data for [54143,6]
[pbs-server:90553] [[54143,0],0] Releasing job data for [54143,7]

Integration

The remainder of this document covers the various phases of PMIx integration with PBS Pro. Each phase will contain tasks that may be apportioned to support an Agile development methodology.

Phase 1 - Framework and wire-up

Integration of PMIx with PBS Pro will require significant amounts of code to be added to the repository. The goal is to organize the new code in a modular way, taking a "minimally invasive" approach to existing code. While PMIx is evolving in ways that could impact the PBS Pro scheduler, all of the changes for the foreseeable future will impact pbs_mom and will be limited to the src/resmom section of the code.

To isolate the PMIx changes, a new C preprocessor macro named "PMIX" will be added. This macro will be defined when the "--with-pmix" parameter is passed to the configure script at build time. Code that relates specifically to PMIx integration may be isolated by enclosing it in "#ifdef PMIX" preprocessor blocks, a practice already common in PBS Pro source code for other extensions. This makes PMIx-specific changes obvious when they must be interspersed with existing PBS Pro code.
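As a minimal sketch of this convention, the hypothetical functions below compile to the baseline PBS Pro behavior unless PMIX is defined. For example, configure might pass -DPMIX to the compiler when --with-pmix is given. The function names and the single placeholder variable are illustrative only and are not taken from the PBS Pro source.

```c
/*
 * Hypothetical illustration of isolating PMIx-specific code in pbs_mom.
 * PMIX would be defined only when configure is run with --with-pmix
 * (for example, as -DPMIX on the compiler command line).
 */

/* Number of extra environment variables added to a spawned task. */
static int
mom_extra_env_count(void)
{
	int count = 0;		/* baseline PBS Pro behavior: nothing extra */
#ifdef PMIX
	count += 1;		/* placeholder for PMIx wire-up variables */
#endif
	return count;
}

/* Describe whether this pbs_mom build includes PMIx support. */
static const char *
mom_pmix_build_status(void)
{
#ifdef PMIX
	return "PMIx enabled";
#else
	return "PMIx disabled";
#endif
}
```

Building without -DPMIX leaves no trace of the guarded code in the object file. This is what allows a build without --with-pmix to behave as if the PMIx code did not exist.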

If the "--with-pmix" parameter is not provided to configure, PBS Pro will behave exactly as it would if no PMIx code existed in the repository. This point deserves emphasis because it allows integration work to carry on without impacting other aspects of PBS Pro behavior. This is a model we should consider adopting more widely in the future, as it provides a mechanism to develop and test new features (especially large ones) in lock step with ongoing development and bug fixes.

The vast majority of PMIx integration code will be contained within the src/resmom/mom_pmix.c file. An accompanying src/resmom/mom_pmix.h file will provide prototypes for the functions called from other areas of the pbs_mom code. Most functions within mom_pmix.c will be declared static and have no external visibility.

The PMIx server is initialized by calling PMIx_server_init() and passing it a PMIx server module data type. The server module contains a number of function pointers that are initialized to point to functions defined within mom_pmix.c. These functions are responsible for carrying out the various operations the PMIx specification provides.
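The sketch below illustrates the pattern of static callbacks collected into a module table that is handed to the server at initialization. It assumes a simplified, hypothetical `mom_pmix_module_t` with three members. The real `pmix_server_module_t` has many more members with different signatures, and the library call this stands in for is `PMIx_server_init()`. The exposed `pbs_pmix_server_init()` and `pbs_pmix_server_finalize()` names are hypothetical candidates for mom_pmix.h.

```c
#include <stdio.h>

/* Simplified, hypothetical stand-in for the PMIx server module. */
typedef struct {
	int (*client_connected)(const char *nspace, int rank);
	int (*client_finalized)(const char *nspace, int rank);
	int (*abort)(const char *nspace, int rank, int status, const char *msg);
} mom_pmix_module_t;

/* Tracks connected clients so the callbacks have observable effects. */
static int connected_clients = 0;

/* Callbacks are static: visible only inside mom_pmix.c. */
static int
mom_client_connected(const char *nspace, int rank)
{
	(void)nspace;
	(void)rank;
	connected_clients++;
	return 0;
}

static int
mom_client_finalized(const char *nspace, int rank)
{
	(void)nspace;
	(void)rank;
	connected_clients--;
	return 0;
}

static int
mom_abort(const char *nspace, int rank, int status, const char *msg)
{
	fprintf(stderr, "%s.%d aborted (status %d): %s\n",
	    nspace, rank, status, msg != NULL ? msg : "");
	return 0;
}

/* The module table handed to the server at initialization. */
static mom_pmix_module_t mom_pmix_module = {
	mom_client_connected,
	mom_client_finalized,
	mom_abort
};

/*
 * Stand-in for the server library holding on to the module; the real
 * call would be PMIx_server_init() with the module and an info array.
 */
static mom_pmix_module_t *active_module = NULL;

/* Would be exposed via mom_pmix.h: called once when pbs_mom starts. */
int
pbs_pmix_server_init(void)
{
	active_module = &mom_pmix_module;
	return 0;
}

/* Would be exposed via mom_pmix.h: called before pbs_mom exits. */
void
pbs_pmix_server_finalize(void)
{
	active_module = NULL;
}
```

Only the two non-static functions would need prototypes in mom_pmix.h. Everything the server calls back into is reached through the table rather than by name.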

A long-term goal is to provide a single pbs_mom binary that uses dlopen() to dynamically load the PMIx library functions at runtime. This goal has been deferred for phase 1 of the project. It is currently unknown whether this will be possible given the organization of the PMIx library. For the time being, pbs_mom will be dynamically linked against the PMIx library at build time. This requires the PMIx library to be installed on every system that runs a pbs_mom built with PMIx integration enabled.
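If the deferred dlopen() approach turns out to be feasible, loading could follow the usual POSIX pattern sketched below. The helper tries to open the library, resolve a symbol, and fall back to non-PMIx behavior if either step fails. The helper `load_optional_symbol()` is hypothetical, and linking it may require -ldl on older C libraries.

```c
#include <dlfcn.h>
#include <stdio.h>

/* Type of a library entry point that takes no arguments and returns a string. */
typedef const char *(*version_fn_t)(void);

/*
 * Try to load an optional shared library and resolve one symbol from it.
 * Returns the function, or NULL if the library or symbol is unavailable,
 * in which case the caller keeps its non-PMIx code path.
 */
static version_fn_t
load_optional_symbol(const char *libname, const char *symbol, void **handle_out)
{
	void *handle;
	version_fn_t fn;

	*handle_out = NULL;
	handle = dlopen(libname, RTLD_NOW | RTLD_LOCAL);
	if (handle == NULL) {
		fprintf(stderr, "optional library not loaded: %s\n", dlerror());
		return NULL;
	}
	/* POSIX-sanctioned way to store a dlsym() result in a function pointer. */
	*(void **)(&fn) = dlsym(handle, symbol);
	if (fn == NULL) {
		dlclose(handle);
		return NULL;
	}
	*handle_out = handle;
	return fn;
}
```

With PMIx installed, a call such as `load_optional_symbol("libpmix.so", "PMIx_Get_version", &handle)` would resolve PMIx's `const char *PMIx_Get_version(void)`. The exact library file name is an assumption and may need to be a versioned soname.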

Wire-up will be supported in the first phase of PMIx integration. This means that any task spawned via the PBS Pro Task Manager (TM) interface will be capable of interacting with the local PMIx server. To support wire-up, PBS must initialize the PMIx server, register the namespace for the job, and populate the environment of each task when it is spawned. The information placed in the environment allows a PMIx client to connect to and interact with the PMIx server.

Initialization of the PMIx server is accomplished with a call to PMIx_server_init() shortly after pbs_mom starts. A call to PMIx_server_finalize() should be made prior to exit.

When a job is started by PBS, the initial shell or binary the user has specified will NOT be provided wire-up data in its environment. Doing so would mean the qsub command would be acting as an application launcher and would have to support several additional parameters. The wire-up data is only supplied to tasks spawned by the job using the TM interface. Initially, pbsdsh may be used to spawn processes for unit testing. A formal launcher will be introduced in a later development phase, eventually supporting multi-application launch.
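The rule above reduces to a simple decision made when a task is created. This is a minimal sketch with a hypothetical `task_origin_t` enum; PBS Pro's actual task structures are not modeled.

```c
#include <stdbool.h>

/* How a task came to exist inside a PBS job (hypothetical enum). */
typedef enum {
	TASK_JOB_SHELL,		/* initial shell or binary started for the job */
	TASK_TM_SPAWN		/* spawned through the TM interface, e.g. by pbsdsh */
} task_origin_t;

/* Decide whether a new task receives PMIx wire-up data in its environment. */
static bool
task_gets_pmix_wireup(task_origin_t origin)
{
	switch (origin) {
	case TASK_TM_SPAWN:
		return true;	/* phase 1: only TM-spawned tasks are wired up */
	case TASK_JOB_SHELL:
	default:
		return false;	/* qsub does not act as an application launcher */
	}
}
```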

During initialization of a job, pbs_mom must register the PMIx namespace by calling PMIx_server_register_nspace(). When a job is complete, pbs_mom must deregister the namespace with a call to PMIx_server_deregister_nspace(). The handle for the namespace is accessible in the environment of spawned tasks so they may identify themselves to the PMIx server.
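The sketch below shows the namespace lifecycle on a single MoM. It uses a hypothetical fixed-size table in place of the PMIx library's own bookkeeping. Using the PBS job ID as the namespace string is an assumption made for illustration. The functions only stand in for `PMIx_server_register_nspace()` and `PMIx_server_deregister_nspace()`, whose real signatures take additional arguments such as completion callbacks.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_NSPACES	16
#define NSPACE_LEN	256	/* illustrative size for a namespace string */

typedef struct {
	char nspace[NSPACE_LEN];
	int nlocalprocs;	/* processes of this job on the local MoM */
	bool in_use;
} nspace_entry_t;

static nspace_entry_t nspace_table[MAX_NSPACES];

/* Find the slot holding nspace, or -1 if it is not registered. */
static int
nspace_find(const char *nspace)
{
	for (int i = 0; i < MAX_NSPACES; i++)
		if (nspace_table[i].in_use &&
		    strcmp(nspace_table[i].nspace, nspace) == 0)
			return i;
	return -1;
}

/* Job start: stands in for PMIx_server_register_nspace(). */
static int
job_register_nspace(const char *nspace, int nlocalprocs)
{
	if (strlen(nspace) >= NSPACE_LEN || nspace_find(nspace) >= 0)
		return -1;	/* too long or already registered */
	for (int i = 0; i < MAX_NSPACES; i++) {
		if (!nspace_table[i].in_use) {
			strcpy(nspace_table[i].nspace, nspace);
			nspace_table[i].nlocalprocs = nlocalprocs;
			nspace_table[i].in_use = true;
			return 0;
		}
	}
	return -1;		/* table full */
}

/* Job end: stands in for PMIx_server_deregister_nspace(). */
static int
job_deregister_nspace(const char *nspace)
{
	int i = nspace_find(nspace);

	if (i < 0)
		return -1;
	nspace_table[i].in_use = false;
	return 0;
}
```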

A running PBS job may contain multiple clients (PMIx-enabled applications). Each client must be registered with the server upon launch. This is done by calling PMIx_server_register_client(), followed by a call to PMIx_server_setup_fork(). The former registers the process with the PMIx namespace that was set up during initialization of the PBS job, and the latter populates the environment of the new process with PMIx-specific data allowing the client to interact with the PMIx server.
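The ordering constraint (register first, then set up the fork) can be sketched as follows. The functions are hypothetical stand-ins for `PMIx_server_register_client()` and `PMIx_server_setup_fork()`. Only `PMIX_NAMESPACE` and `PMIX_RANK` are modeled here. The real setup_fork adds further variables, such as the server's contact information, which this sketch omits.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>

/* Bookkeeping for one client process (hypothetical). */
typedef struct {
	char nspace[256];
	int rank;
	uid_t uid;
	gid_t gid;
	int registered;
} client_t;

/* Append "name=value" to a NULL-terminated environment array, growing it. */
static int
env_append(char ***envp, const char *name, const char *value)
{
	size_t n = 0, len;
	char **grown;

	while (*envp != NULL && (*envp)[n] != NULL)
		n++;
	grown = realloc(*envp, (n + 2) * sizeof(char *));
	if (grown == NULL)
		return -1;
	*envp = grown;
	grown[n + 1] = NULL;
	len = strlen(name) + strlen(value) + 2;	/* '=' and '\0' */
	grown[n] = malloc(len);
	if (grown[n] == NULL)
		return -1;	/* array remains NULL-terminated at n */
	snprintf(grown[n], len, "%s=%s", name, value);
	return 0;
}

/* Free an environment array built by env_append(). */
static void
env_free(char **env)
{
	for (size_t i = 0; env != NULL && env[i] != NULL; i++)
		free(env[i]);
	free(env);
}

/* Step 1, stands in for PMIx_server_register_client(). */
static int
mom_register_client(client_t *c, const char *nspace, int rank, uid_t uid, gid_t gid)
{
	snprintf(c->nspace, sizeof(c->nspace), "%s", nspace);
	c->rank = rank;
	c->uid = uid;
	c->gid = gid;
	c->registered = 1;
	return 0;
}

/* Step 2, stands in for PMIx_server_setup_fork(): fill the child's environment. */
static int
mom_setup_fork(const client_t *c, char ***envp)
{
	char rank[32];

	if (!c->registered)
		return -1;	/* register the client before setting up its fork */
	snprintf(rank, sizeof(rank), "%d", c->rank);
	if (env_append(envp, "PMIX_NAMESPACE", c->nspace) != 0)
		return -1;
	return env_append(envp, "PMIX_RANK", rank);
}
```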

The result of phase one will be a basic framework for adding code in subsequent phases. From a functional standpoint, the contents of the environment may be verified by using pbsdsh to invoke the env command.
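The same check can be performed inside a small test client launched with pbsdsh. The hypothetical helper below prints and counts the environment entries that begin with a given prefix, roughly what `env | grep PMIX_` would show. The `PMIX_` prefix is an assumption based on the variable names PMIx uses.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/*
 * Print (to out, if non-NULL) and count the entries of a NULL-terminated
 * environment array that start with prefix.
 */
static size_t
print_matching_env(char *const envp[], const char *prefix, FILE *out)
{
	size_t plen = strlen(prefix), count = 0;

	for (size_t i = 0; envp != NULL && envp[i] != NULL; i++) {
		if (strncmp(envp[i], prefix, plen) == 0) {
			if (out != NULL)
				fprintf(out, "%s\n", envp[i]);
			count++;
		}
	}
	return count;
}
```

A test program could declare `extern char **environ;` and call `print_matching_env(environ, "PMIX_", stdout)`. It should see a nonzero count only in tasks spawned through TM.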

Phase 2 - Support basic PMIx operations

The second phase of PMIx integration will add support for additional PMIx operations, including GET, PUT, ABORT, and FENCE. The callbacks for these operations may be easily added to the framework created in phase one.
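As a rough illustration of the PUT and GET semantics, the sketch below keeps the values each rank posts under a key in a fixed-size table. The names are hypothetical, and the sketch only models the key-value bookkeeping. It does not reflect how PMIx actually exchanges data between nodes.

```c
#include <stddef.h>
#include <string.h>

#define KV_MAX		64
#define KV_KEYLEN	64
#define KV_VALLEN	128

/* One value posted by a rank under a key (hypothetical layout). */
typedef struct {
	int rank;
	char key[KV_KEYLEN];
	char value[KV_VALLEN];
} kv_entry_t;

static kv_entry_t kv_store[KV_MAX];
static int kv_count = 0;

/* Locate the entry for (rank, key), or return -1. */
static int
kv_find(int rank, const char *key)
{
	for (int i = 0; i < kv_count; i++)
		if (kv_store[i].rank == rank && strcmp(kv_store[i].key, key) == 0)
			return i;
	return -1;
}

/* PUT: store or overwrite the value for (rank, key). */
static int
kv_put(int rank, const char *key, const char *value)
{
	int i;

	if (strlen(key) >= KV_KEYLEN || strlen(value) >= KV_VALLEN)
		return -1;
	i = kv_find(rank, key);
	if (i < 0) {
		if (kv_count == KV_MAX)
			return -1;
		i = kv_count++;
		kv_store[i].rank = rank;
		strcpy(kv_store[i].key, key);
	}
	strcpy(kv_store[i].value, value);
	return 0;
}

/* GET: return the value posted by rank under key, or NULL if absent. */
static const char *
kv_get(int rank, const char *key)
{
	int i = kv_find(rank, key);

	return i < 0 ? NULL : kv_store[i].value;
}
```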

Supporting the FENCE operation allows a PMIx enabled application to execute a barrier. It relies on the presence of a generic collective management facility to function properly. A collective operation involves one or more clients where all clients must be synchronized before progress is allowed to continue. Once synchronized, all clients must be notified the barrier has been satisfied. A basic approach will be taken whereby mother superior will act as the collective manager. Clients will inform mother superior of their need to synchronize via an all-to-one mechanism. Once all required members have synchronized, the barrier is satisfied and a one-to-all mechanism will be used to inform all members they may proceed.
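The flat all-to-one/one-to-all scheme described above can be simulated in a few lines. In this minimal sketch, a hypothetical `fence_t` held by mother superior counts arrivals by rank. Once the last expected participant reports, it marks every participant as released. The actual messages between MoMs are not modeled, and participants are assumed to be numbered 0 through `expected - 1`.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_PARTICIPANTS 64

/* Mother superior's state for one FENCE operation (hypothetical). */
typedef struct {
	int expected;				/* clients that must arrive */
	int arrived;				/* clients that have arrived */
	bool seen[MAX_PARTICIPANTS];		/* ranks that have arrived */
	bool released[MAX_PARTICIPANTS];	/* ranks told to proceed */
} fence_t;

/* Prepare a fence for 'expected' participants; returns -1 if out of range. */
static int
fence_init(fence_t *f, int expected)
{
	if (expected < 1 || expected > MAX_PARTICIPANTS)
		return -1;
	memset(f, 0, sizeof(*f));
	f->expected = expected;
	return 0;
}

/* One-to-all: notify every participant that the barrier is satisfied. */
static void
fence_release_all(fence_t *f)
{
	for (int r = 0; r < f->expected; r++)
		f->released[r] = true;	/* stands in for a message to rank r */
}

/*
 * All-to-one: a participant reports to mother superior that it has reached
 * the fence. Returns true when this arrival completes the barrier.
 */
static bool
fence_arrive(fence_t *f, int rank)
{
	if (rank < 0 || rank >= f->expected || f->seen[rank])
		return false;	/* ignore invalid or duplicate arrivals */
	f->seen[rank] = true;
	if (++f->arrived == f->expected) {
		fence_release_all(f);
		return true;
	}
	return false;
}
```

Duplicate arrivals are ignored so that a retransmitted report cannot complete the barrier early.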

The remaining operations targeted for this phase will be documented prior to implementation, but will not require as much effort or infrastructure as FENCE.

Functionality in this phase may be validated via custom PMIx clients. Several custom clients are provided in the PMIx examples directory and may be customized as required. Some MPI based testing may begin in this phase, but is not compulsory.

Phase 3 - Client launcher and MPI integration

The third phase will focus on development of a PMIx application launcher. One proposed name for this launcher is "qlaunch", though other names may be considered. The launcher will initially be responsible for spawning individual applications, and may later be enhanced to spawn multiple applications simultaneously. PMIx provides numerous capabilities when it comes to describing and spawning applications, and the launcher should support a reasonable subset of those available.

Additional testing with multiple flavors of MPI will be required. PMIx integration will be considered fully functional at this point, and should be tested, documented, and marketed as such.

Phase 4 - Event notification support and optimization

PMIx provides the ability for clients to register for and generate events. This is an advanced capability currently unsupported by other resource managers. PBS is targeting support for PMIx events in the fourth phase of development.
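The following is a minimal sketch of register-and-notify semantics, using a hypothetical handler table and arbitrary integer event codes. The real client-facing calls are `PMIx_Register_event_handler()` and `PMIx_Notify_event()`, whose signatures differ substantially. This sketch only shows handlers being invoked for the codes they registered for.

```c
#include <stddef.h>

#define MAX_HANDLERS 8

/* Callback invoked when a matching event is generated (hypothetical type). */
typedef void (*event_handler_t)(int code, const char *source, void *cbdata);

typedef struct {
	int code;
	event_handler_t fn;
	void *cbdata;
} handler_reg_t;

static handler_reg_t handlers[MAX_HANDLERS];
static size_t nhandlers = 0;

/* Register interest in one event code; returns a handler id or -1. */
static int
event_register(int code, event_handler_t fn, void *cbdata)
{
	if (nhandlers == MAX_HANDLERS || fn == NULL)
		return -1;
	handlers[nhandlers] = (handler_reg_t){ code, fn, cbdata };
	return (int)nhandlers++;
}

/* Generate an event: run every handler registered for code; return the count. */
static int
event_notify(int code, const char *source)
{
	int delivered = 0;

	for (size_t i = 0; i < nhandlers; i++) {
		if (handlers[i].code == code) {
			handlers[i].fn(code, source, handlers[i].cbdata);
			delivered++;
		}
	}
	return delivered;
}
```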

This phase may also entail work on optimization of the collective framework to support FENCE and other operations.

References

- https://pmix.org/

- https://github.com/pmix/pmix/wiki

- https://github.com/pmix/publications (numerous)

- https://www.open-mpi.org/papers/sc-2015-pmix/PMIx-BoF.pdf

- http://www.mcs.anl.gov/papers/P1760.pdf

- https://wiki.mpich.org/mpich/index.php/PMI_v2_API
